Conversational Question Generation using Large Language Models

Proposes a three-stacked LLM model that uses in-context learning to generate context-aware conversational follow-up questions. A/B experiments with 33 middle school students showed a 13% learning improvement compared to students who did not answer any follow-up questions.

The work was published at the 25th International Conference, AIED 2024 (acceptance rate: 25% of 334 submissions). Paper link

In this project, we leverage the in-context learning capabilities of Large Language Models (LLMs) to generate conversational history and follow-up questions focused on ideal responses, so that students learn by answering these questions.

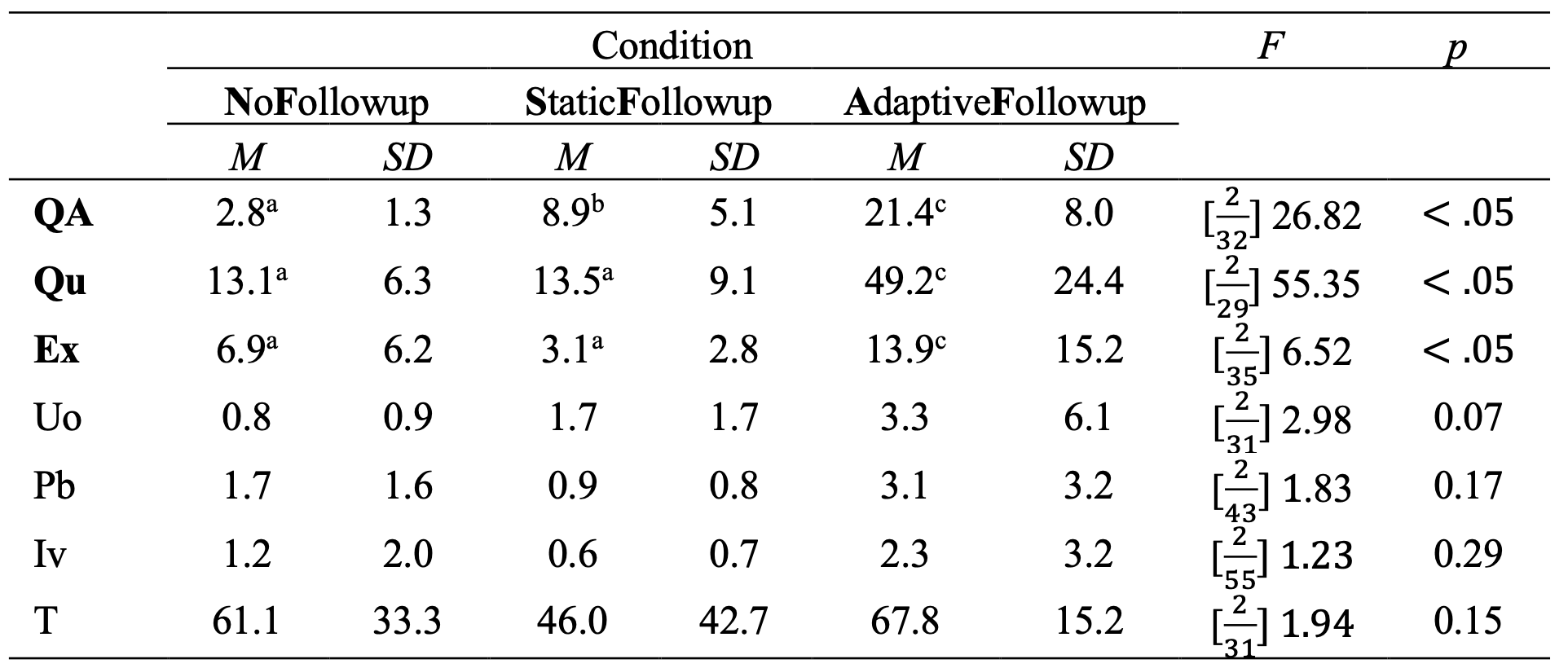
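To make the in-context learning idea concrete, here is a minimal sketch of how a few-shot prompt for follow-up question generation could be assembled. It is not the ExpectAdapt implementation: the `Turn` and `Example` types, the `build_prompt` function, the prompt wording, and the fraction problems are all hypothetical and chosen only for illustration. The sketch stops at building the prompt string and does not call any LLM.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turn:
    """One tutor question and the student's answer (hypothetical type)."""
    question: str
    answer: str


@dataclass
class Example:
    """A hand-written demonstration shown to the LLM (hypothetical type)."""
    problem: str
    ideal_response: str
    history: List[Turn]
    follow_up: str


def format_history(history: List[Turn]) -> str:
    """Render the conversation so far as alternating tutor/student lines."""
    if not history:
        return "(no previous turns)"
    return "\n".join(f"Tutor: {t.question}\nStudent: {t.answer}" for t in history)


def build_prompt(examples: List[Example], problem: str,
                 ideal_response: str, history: List[Turn]) -> str:
    """Assemble a few-shot prompt: instruction, demonstrations, then the open case."""
    parts = ["Write one follow-up question that guides the student "
             "toward the ideal response."]
    # Each demonstration pairs a context with the follow-up question we want.
    for ex in examples:
        parts.append(
            f"Problem: {ex.problem}\n"
            f"Ideal response: {ex.ideal_response}\n"
            f"Conversation:\n{format_history(ex.history)}\n"
            f"Follow-up question: {ex.follow_up}"
        )
    # The final block is left open so the model completes the follow-up question.
    parts.append(
        f"Problem: {problem}\n"
        f"Ideal response: {ideal_response}\n"
        f"Conversation:\n{format_history(history)}\n"
        "Follow-up question:"
    )
    return "\n\n".join(parts)


if __name__ == "__main__":
    demo = Example(
        problem="What is 3/4 + 1/4?",
        ideal_response="The denominators match, so add the numerators: 4/4 = 1.",
        history=[Turn("What do you notice about the denominators?",
                      "They are the same.")],
        follow_up="What should you do with the numerators?",
    )
    print(build_prompt([demo], "What is 2/5 + 1/5?",
                       "Add the numerators to get 3/5.", []))
```

Including the conversation history and the ideal response in every demonstration is what lets the model condition its next question on both, without any fine-tuning.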

We compare student interaction logs and test data across three conditions:

- NoFollowUp Condition: Students did not answer any follow-up questions.

- StaticFollowUp Condition: Students answered canned or scripted follow-up questions.

- AdaptiveFollowUp Condition: Students answered follow-up questions generated by our proposed model, ExpectAdapt.

The experiment showed that students spent more time answering adaptive follow-up questions, and this additional time correlated with improved problem-solving accuracy in the Intelligent Tutoring System. The adaptive approach also helped students achieve higher learning gains while working on significantly fewer problems.