Metadata Extraction from Educational Videos using Audio Transcript

This project extracts metadata from educational videos using their audio transcripts, with the goal of predicting each video's usefulness in terms of popularity and educational value.

This project was completed during a week-long summer school organized by LearnLab at Carnegie Mellon University.

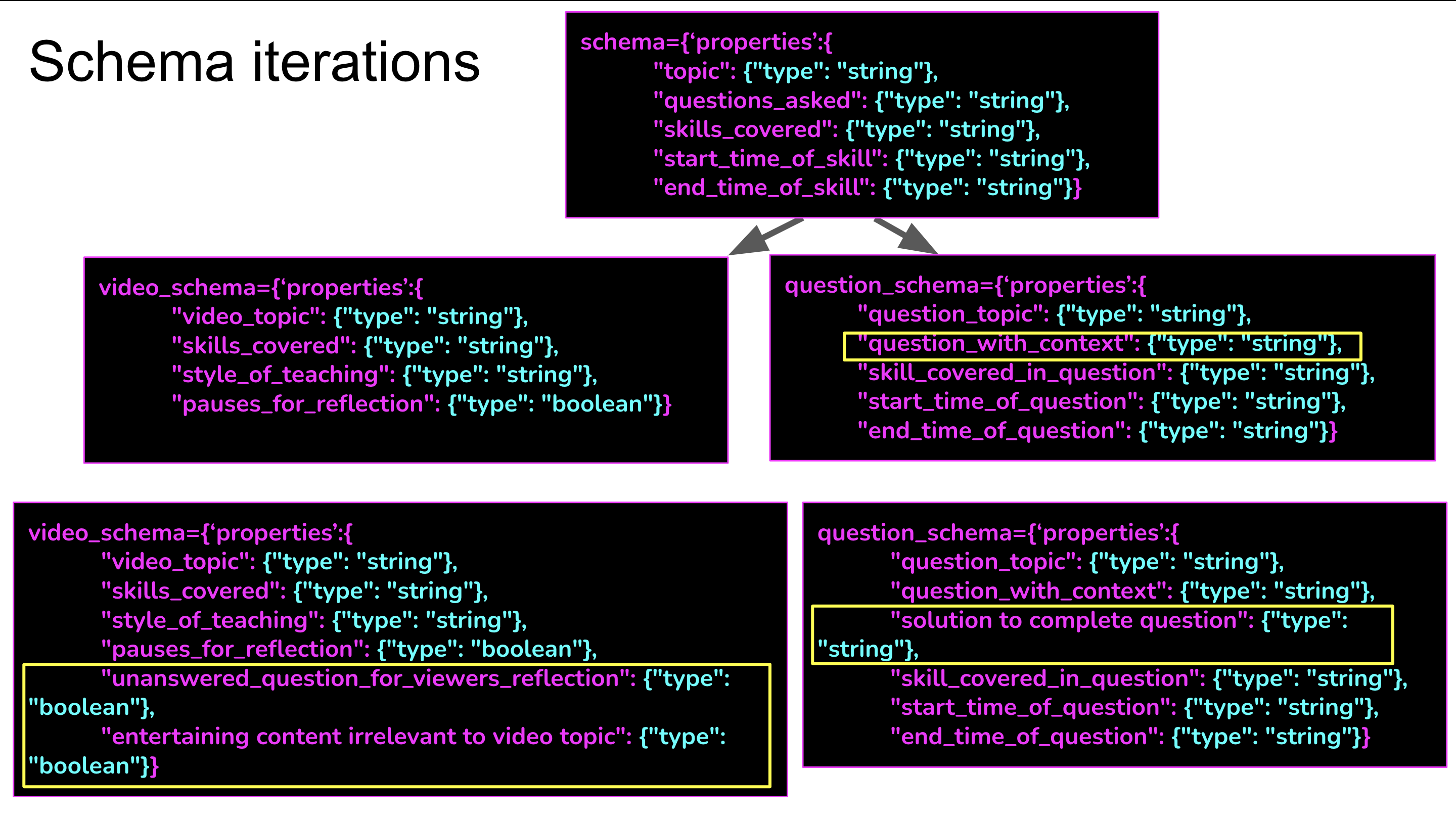

We use LangChain to prompt the GPT-3.5-Turbo model, refining the extraction schema over several iterations.
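To make the idea of schema-driven prompting concrete, here is a minimal sketch in plain Python. It is not the project's actual code and deliberately avoids LangChain calls so it runs on its own: the schema fields, `build_prompt`, `extract_metadata`, and `fake_llm` are all hypothetical names chosen for illustration, and `fake_llm` stands in for a real call to GPT-3.5-Turbo.

```python
import json
from typing import Callable, Dict

# Hypothetical schema (field name -> description); NOT the project's real schema.
SCHEMA: Dict[str, str] = {
    "has_reflection_pauses": "true if the speaker pauses so viewers can reflect",
    "has_entertaining_elements": "true if the video contains entertaining elements",
}


def build_prompt(transcript: str, schema: Dict[str, str]) -> str:
    """Turn the schema into instructions asking for one JSON object."""
    fields = "\n".join(
        f'- "{name}" (boolean): {desc}' for name, desc in schema.items()
    )
    return (
        "Read the video transcript and answer with a single JSON object "
        "containing exactly these keys:\n"
        f"{fields}\n\nTranscript:\n{transcript}\n"
    )


def extract_metadata(
    transcript: str,
    llm: Callable[[str], str],
    schema: Dict[str, str] = SCHEMA,
) -> Dict[str, bool]:
    """Prompt the model and validate its JSON reply against the schema."""
    data = json.loads(llm(build_prompt(transcript, schema)))
    for key in schema:
        # Reject replies that omit a field or return a non-boolean value.
        if not isinstance(data.get(key), bool):
            raise ValueError(f"missing or non-boolean field: {key}")
    return {key: data[key] for key in schema}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption: the model replies in JSON)."""
    return json.dumps(
        {"has_reflection_pauses": True, "has_entertaining_elements": False}
    )


if __name__ == "__main__":
    print(extract_metadata("Pause the video and think about it.", fake_llm))
```

Iterating on the schema then amounts to editing the field names and descriptions in `SCHEMA` and re-running the extraction on the same transcripts to compare results.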

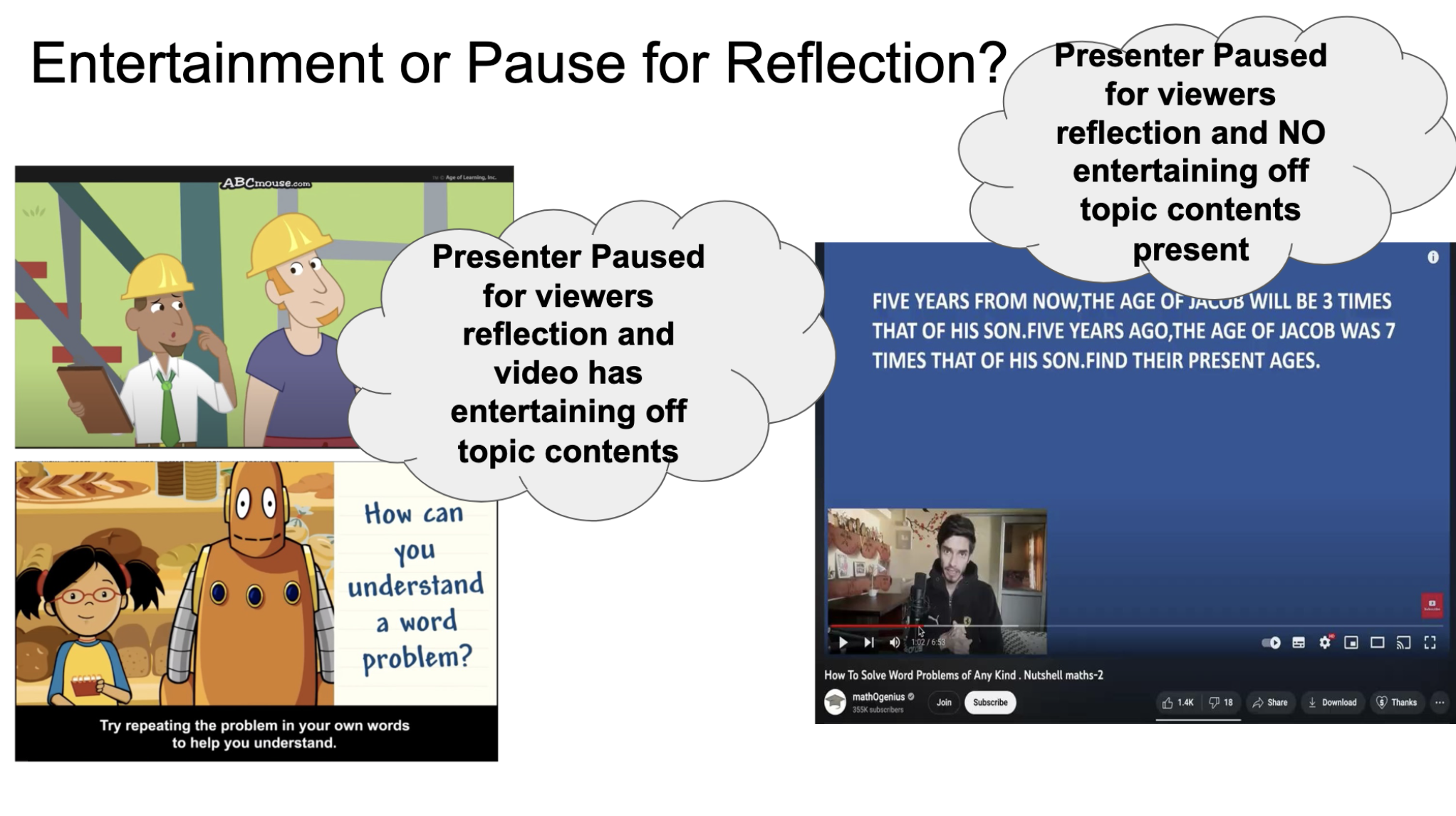

One finding from our qualitative analysis is that LLMs can extract features of a video from its transcript when prompted with a LangChain schema. Among other features, LLMs were proficient at identifying, from the audio transcript alone, whether a video includes pauses for viewer reflection or contains entertaining elements.

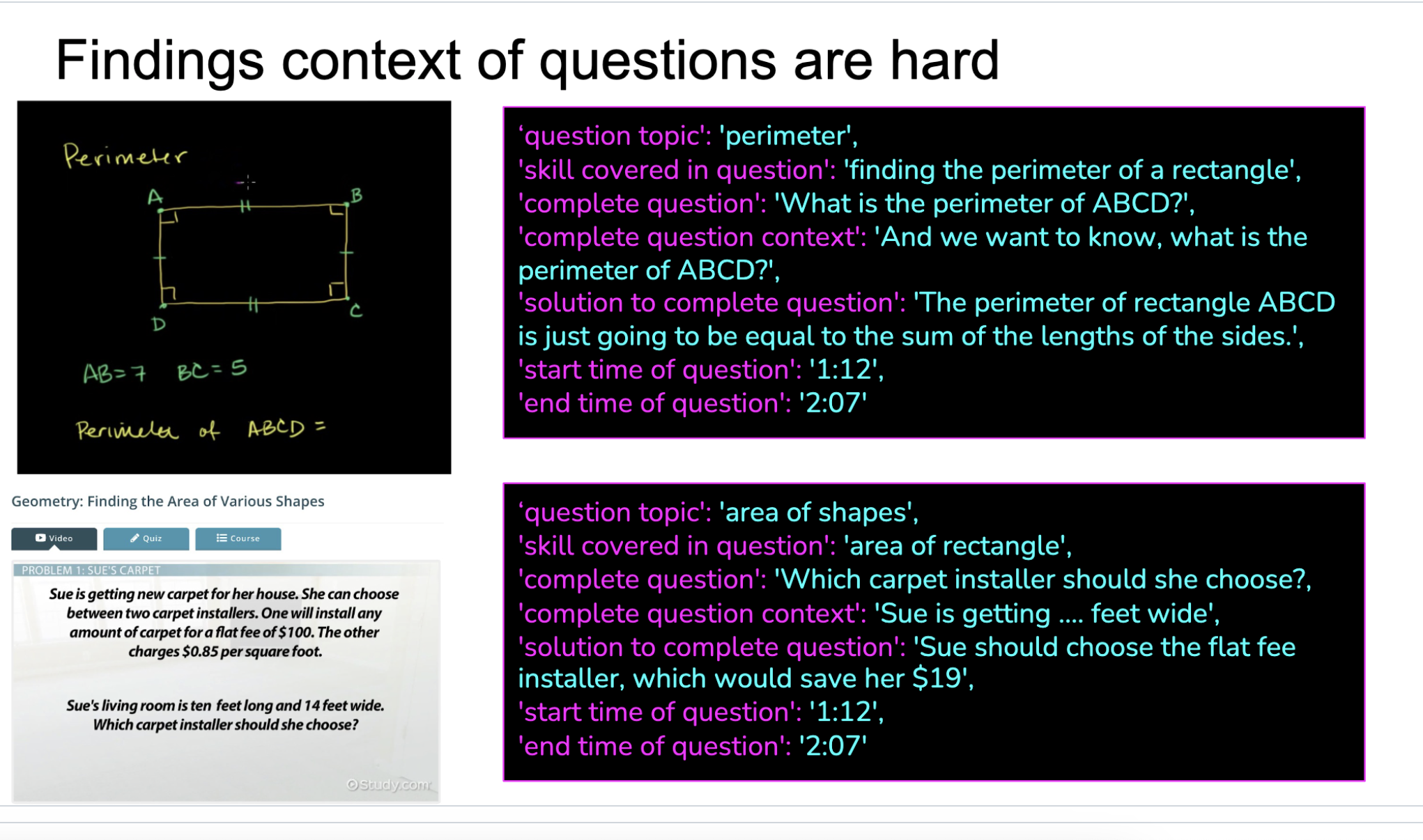

Our analysis also uncovered challenges for LLMs, particularly in detecting the context of questions posed by the speaker when only the transcript is available. This highlights an area for improvement in how LLMs process and understand spoken content.